Transcoding Procedures

The application can use oneVPL encoding, decoding, and video processing functions together for transcoding operations. This section describes the key aspects of connecting two or more oneVPL functions together.

Asynchronous Pipeline

The application passes the output of an upstream oneVPL function to the input of the downstream oneVPL function to construct an asynchronous pipeline. Pipeline construction is done at runtime and can be dynamically changed, as shown in the following example:

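```c
mfxSyncPoint sp_d, sp_v, sp_e;
mfxFrameSurface1 *vin = NULL; /* decoded frame returned by DECODE */

/* session, bs, work, vout, bits2, and going_through_vpp are assumed to be
   set up by the application beforehand. */
MFXVideoDECODE_DecodeFrameAsync(session, bs, work, &vin, &sp_d);

if (going_through_vpp) {
    /* VPP consumes the decoded surface without waiting on sp_d */
    MFXVideoVPP_RunFrameVPPAsync(session, vin, vout, NULL, &sp_v);
    MFXVideoENCODE_EncodeFrameAsync(session, NULL, vout, bits2, &sp_e);
} else {
    MFXVideoENCODE_EncodeFrameAsync(session, NULL, vin, bits2, &sp_e);
}

/* Synchronize only once, on the last stage of the pipeline */
MFXVideoCORE_SyncOperation(session, sp_e, MFX_INFINITE);
```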

oneVPL simplifies the requirements for asynchronous pipeline synchronization. The application only needs to synchronize after the last oneVPL function. Explicit synchronization of intermediate results is not required and may slow performance.

oneVPL tracks dynamic pipeline construction and verifies dependencies on input and output parameters to ensure the correct execution order of the pipeline functions.

In the previous example, oneVPL ensures that MFXVideoENCODE_EncodeFrameAsync() does not begin its operation until the function that produces its input surface, MFXVideoDECODE_DecodeFrameAsync() or MFXVideoVPP_RunFrameVPPAsync(), has finished.

During the execution of an asynchronous pipeline, the application must consider the input data as “in use” and must not change it until the execution has completed. The application must also consider output data unavailable until the execution has finished. In addition, for encoders, the application must consider extended and payload buffers as “in use” while the input surface is locked.

oneVPL checks dependencies by comparing the input and output parameters of each oneVPL function in the pipeline. Do not modify the contents of input and output parameters before the previous asynchronous operation finishes. Doing so will break the dependency check and can result in undefined behavior. An exception occurs when the input and output parameters are structures, in which case overwriting fields in the structures is allowed.

Note

The dependency check works on the pointers to the structures only.
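A toy model in C can make the pointer-based dependency check concrete. This is a hypothetical sketch, not how oneVPL is implemented internally. `stage_t`, `frame_t`, and `find_producer()` are illustrative names only. In this model, a stage depends on an earlier stage when its input pointer equals that stage's output pointer, as in the DECODE, VPP, ENCODE example above.

```c
/* Hypothetical model for illustration only; this is not oneVPL's
   internal implementation. */
typedef struct {
    const char *name;    /* stage name, e.g. "DECODE" */
    const void *input;   /* pointer passed as the stage's input */
    const void *output;  /* pointer passed as the stage's output */
} stage_t;

/* Stand-in for a frame surface; only its address matters here. */
typedef struct {
    int width;
    int height;
} frame_t;

/* Returns the index of the most recent earlier stage whose output pointer
   equals the input pointer of stage i, or -1 if stage i has no producer in
   the pipeline. Only pointers are compared; the pointed-to contents are not. */
static int find_producer(const stage_t *stages, int i) {
    for (int j = i - 1; j >= 0; --j) {
        if (stages[j].output == stages[i].input)
            return j;
    }
    return -1;
}
```

Because only addresses are compared, overwriting a field such as `decoded.width` leaves the DECODE to VPP dependency intact. Passing a different surface pointer, however, would not be linked to DECODE at all.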

There are two exceptions with respect to intermediate synchronization:

1. If the input is from any asynchronous operation, the application must synchronize any input before calling the oneVPL MFXVideoDECODE_DecodeFrameAsync() function.
2. When the application calls an asynchronous function to generate an output surface in video memory and passes that surface to a non-oneVPL component, it must explicitly synchronize the operation before passing the surface to the non-oneVPL component.

Surface Pool Allocation

When connecting API function A to API function B, the application must take into account the requirements of both functions to calculate the number of frame surfaces in the surface pool. Typically, the application can use the formula Na + Nb, where Na is the number of frame surfaces that oneVPL function A requires for its output, and Nb is the number of frame surfaces that oneVPL function B requires for its input.

For best performance, the application must submit multiple operations and delay synchronization as much as possible. This gives oneVPL the flexibility to organize internal pipelining. For example, compare the following two operation sequences, where the first sequence is the recommended order:

![Operation sequence 1: ENCODE(F1), ENCODE(F2), SYNC(F1), SYNC(F2)](../../../../_images/graphviz-1d3aa857b4784f656d4eac77e4266e8349f08e34.png)

Recommended operation sequence

![Operation sequence 2: ENCODE(F1), SYNC(F1), ENCODE(F2), SYNC(F2)](../../../../_images/graphviz-5a3d1fe20eb74b213cfce550b4f5465cdbfc9178.png)

Operation sequence (not recommended)
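A toy model in C shows why the two sequences differ in surface needs: it counts how many operations are outstanding at once. This is a hypothetical sketch, not a oneVPL API. `peak_in_flight()` reads 'E' as submitting one asynchronous ENCODE operation and 'S' as synchronizing the oldest outstanding one. It assumes that each outstanding operation keeps one input surface locked until it is synchronized.

```c
#include <stddef.h>

/* Toy model (hypothetical, not a oneVPL API):
   'E' submits one asynchronous ENCODE operation,
   'S' synchronizes the oldest outstanding operation,
   any other character is ignored.
   Returns the peak number of outstanding operations, or -1 if the sequence
   synchronizes when nothing is outstanding. */
static int peak_in_flight(const char *ops) {
    int in_flight = 0;
    int peak = 0;
    for (size_t i = 0; ops[i] != '\0'; ++i) {
        if (ops[i] == 'E') {
            ++in_flight;
            if (in_flight > peak)
                peak = in_flight;
        } else if (ops[i] == 'S') {
            if (in_flight == 0)
                return -1; /* nothing to synchronize */
            --in_flight;
        }
    }
    return peak;
}
```

Under these assumptions, sequence 1 (`"EESS"`) peaks at two outstanding operations, while sequence 2 (`"ESES"`) peaks at one. The recommended order therefore gives oneVPL more work to pipeline, at the cost of one extra locked surface in this model.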

In the recommended sequence, the surface pool needs additional surfaces to account for multiple asynchronous operations that are in flight before synchronization. The application can use the mfxVideoParam::AsyncDepth field to inform a oneVPL function of the number of asynchronous operations the application plans to perform before synchronization. The corresponding oneVPL QueryIOSurf function will reflect this number in the mfxFrameAllocRequest::NumFrameSuggested value. The following example shows one way to calculate the required number of surfaces from the mfxFrameAllocRequest::NumFrameSuggested values:

```c
mfxVideoParam init_param_v, init_param_e;
mfxFrameAllocRequest response_v[2], response_e;

/* The remaining fields of init_param_v and init_param_e are assumed
   to be filled in by the application. */

/* Desired asynchronous depth */
mfxU16 async_depth = 4;

init_param_v.AsyncDepth = async_depth;
/* response_v[0] describes VPP input, response_v[1] describes VPP output */
MFXVideoVPP_QueryIOSurf(session, &init_param_v, response_v);

init_param_e.AsyncDepth = async_depth;
MFXVideoENCODE_QueryIOSurf(session, &init_param_e, &response_e);

mfxU32 num_surfaces = response_v[1].NumFrameSuggested
                    + response_e.NumFrameSuggested
                    - async_depth; /* double counted in ENCODE & VPP */
```
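The arithmetic in the example can be wrapped in a small helper. This is a hypothetical sketch: `shared_pool_size()` is not part of oneVPL. It assumes, as the comment in the example states, that the AsyncDepth share appears in both the VPP output suggestion and the ENCODE input suggestion, so it is subtracted once. It also guards against unsigned underflow if the inputs are inconsistent.

```c
#include <stdint.h>

/* Hypothetical helper, not a oneVPL API.
   vpp_out_suggested: NumFrameSuggested of the VPP output request
   enc_in_suggested:  NumFrameSuggested of the ENCODE input request
   async_depth:       the AsyncDepth value given to both functions
   The VPP output and ENCODE input surfaces are the same pool, so the
   async_depth share counted by both suggestions is subtracted once.
   Returns 0 to signal inconsistent input instead of wrapping around. */
static uint32_t shared_pool_size(uint32_t vpp_out_suggested,
                                 uint32_t enc_in_suggested,
                                 uint16_t async_depth) {
    uint32_t total = vpp_out_suggested + enc_in_suggested;
    return total > async_depth ? total - async_depth : 0;
}
```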

Pipeline Error Reporting

During asynchronous pipeline construction, each pipeline stage function will return a synchronization point (sync point). These synchronization points are useful in tracking errors during the asynchronous pipeline operation.

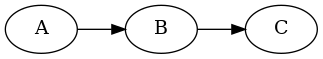

For example, assume the following pipeline:

The application synchronizes on sync point C. If the error occurred in function C, the synchronization returns the exact error code. If the error occurred before function C, the synchronization returns mfxStatus::MFX_ERR_ABORTED. The application can then try to synchronize on sync point B. Similarly, if the error occurred in function B, the synchronization returns the exact error code; otherwise, it returns mfxStatus::MFX_ERR_ABORTED. The same logic applies if the error occurred in function A.
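The walk-back procedure can be sketched as a small simulation in C. Everything here is hypothetical:

* `sim_status`, `sim_sync()`, and `locate_failed_stage()` are illustrative names.
* The status values stand in for the exact error code and mfxStatus::MFX_ERR_ABORTED.
* Functions A, B, and C are numbered as stages 0, 1, and 2.

`sim_sync()` models the rule described above. A sync point reports the exact error only for the stage that failed, and it reports ABORTED for every stage downstream of that stage.

```c
/* Hypothetical status codes for this sketch; they are not mfxStatus values. */
typedef enum {
    SIM_ERR_NONE = 0,    /* the stage and everything upstream succeeded */
    SIM_ERR_ABORTED = 1, /* an upstream stage failed (like MFX_ERR_ABORTED) */
    SIM_ERR_FAILED = 2   /* this stage itself failed (the exact error code) */
} sim_status;

/* Models what synchronizing on stage k's sync point reports when the first
   failure happened in stage failed_stage (-1 means no failure). */
static sim_status sim_sync(int k, int failed_stage) {
    if (failed_stage < 0 || failed_stage > k)
        return SIM_ERR_NONE;
    return failed_stage == k ? SIM_ERR_FAILED : SIM_ERR_ABORTED;
}

/* Starts at the last stage and walks back through the sync points while
   synchronization reports ABORTED. Returns the index of the stage that
   reported the exact error, or -1 if the pipeline succeeded. */
static int locate_failed_stage(int num_stages, int failed_stage) {
    for (int k = num_stages - 1; k >= 0; --k) {
        sim_status st = sim_sync(k, failed_stage);
        if (st != SIM_ERR_ABORTED)
            return st == SIM_ERR_FAILED ? k : -1;
    }
    return -1;
}
```

For example, suppose function A (stage 0) fails. Synchronizing on C and then on B both report ABORTED, and the loop stops at A, which reports the exact error.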