Encoding Procedures

There are two methods for shared memory allocation and handling in oneVPL: external and internal.

External Memory

The following pseudo code shows the encoding procedure with external memory (legacy mode):

    /* Ask the encoder how many working surfaces it needs, then allocate them. */
    MFXVideoENCODE_QueryIOSurf(session, &init_param, &request);
    allocate_pool_of_frame_surfaces(request.NumFrameSuggested);
    MFXVideoENCODE_Init(session, &init_param);
    sts=MFX_ERR_MORE_DATA;
    for (;;) {
        if (sts==MFX_ERR_MORE_DATA && !end_of_stream()) {
            find_unlocked_surface_from_the_pool(&surface);
            fill_content_for_encoding(surface);
        }
        /* A NULL surface drains the frames cached inside the encoder. */
        surface2=end_of_stream()?NULL:surface;
        sts=MFXVideoENCODE_EncodeFrameAsync(session,NULL,surface2,bits,&syncp);
        if (end_of_stream() && sts==MFX_ERR_MORE_DATA) break;
        // Skipped other error handling
        if (sts==MFX_ERR_NONE) {
            /* The bitstream is valid only after synchronization. */
            MFXVideoCORE_SyncOperation(session, syncp, INFINITE);
            do_something_with_encoded_bits(bits);
        }
    }
    MFXVideoENCODE_Close(session);
    free_pool_of_frame_surfaces();

Note the following key points about the example:

- The application uses the MFXVideoENCODE_QueryIOSurf() function to obtain the number of working frame surfaces required for reordering input frames.
- The application calls the MFXVideoENCODE_EncodeFrameAsync() function for the encoding operation. The input frame must be in an unlocked frame surface from the frame surface pool. If the encoding output is not available, the function returns the mfxStatus::MFX_ERR_MORE_DATA status code to request additional input frames.
- Upon successful encoding, the MFXVideoENCODE_EncodeFrameAsync() function returns mfxStatus::MFX_ERR_NONE. At this point, the encoded bitstream is not yet available because the MFXVideoENCODE_EncodeFrameAsync() function is asynchronous. The application must use the MFXVideoCORE_SyncOperation() function to synchronize the encoding operation before retrieving the encoded bitstream.
- At the end of the stream, the application repeatedly calls the MFXVideoENCODE_EncodeFrameAsync() function with a NULL surface pointer to drain any remaining bitstreams cached within the oneVPL encoder, until the function returns mfxStatus::MFX_ERR_MORE_DATA.
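The requirement that each input frame comes from an unlocked surface can be illustrated with a small, self-contained sketch. The `SketchSurface` type and the `find_unlocked_surface` helper are hypothetical stand-ins, not oneVPL API; the `locked` field only mimics the lock counter that the library maintains on a real surface (`mfxFrameSurface1::Data.Locked`).

```c
#include <stddef.h>

/* Hypothetical stand-in for a frame surface. `locked` mimics the lock
 * counter that is non-zero while the encoder still needs the frame. */
typedef struct {
    unsigned short locked;
    int id;
} SketchSurface;

/* Return the first surface the encoder is not using, or NULL if every
 * surface in the pool is still locked. */
static SketchSurface *find_unlocked_surface(SketchSurface *pool, size_t count)
{
    for (size_t i = 0; i < count; i++) {
        if (pool[i].locked == 0)
            return &pool[i];
    }
    return NULL;
}
```

If the helper returns NULL, an application would typically wait for an outstanding operation to complete before retrying, since a surface only becomes reusable once the encoder has unlocked it.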

Note

It is the application’s responsibility to fill pixels outside of the crop window when it is smaller than the frame to be encoded, especially in cases when crops are not aligned to minimum coding block size (16 for AVC and 8 for HEVC and VP9).
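One common way to fill the area outside the crop window is to repeat the nearest pixel inside it. The sketch below does this for a single 8-bit plane, assuming the crop window starts at the top-left corner of the frame; the helper names are hypothetical and the padding strategy is only one reasonable choice, not something mandated by oneVPL.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Round `value` up to the next multiple of `block` (for example 16 for AVC). */
static size_t align_up(size_t value, size_t block)
{
    return (value + block - 1) / block * block;
}

/* Fill everything outside a crop window anchored at (0,0) by repeating the
 * nearest pixel inside the window. `plane` holds `height` rows of `pitch`
 * bytes; the crop window is crop_w x crop_h, the frame is width x height. */
static void pad_outside_crop(uint8_t *plane, size_t pitch,
                             size_t width, size_t height,
                             size_t crop_w, size_t crop_h)
{
    if (crop_w == 0 || crop_h == 0)
        return;

    /* Extend each cropped row to the right with its last valid pixel. */
    for (size_t y = 0; y < crop_h; y++) {
        uint8_t *row = plane + y * pitch;
        for (size_t x = crop_w; x < width; x++)
            row[x] = row[crop_w - 1];
    }

    /* Copy the last (already extended) cropped row into the rows below. */
    const uint8_t *last = plane + (crop_h - 1) * pitch;
    for (size_t y = crop_h; y < height; y++)
        memcpy(plane + y * pitch, last, width);
}
```

For a 1920x1080 AVC stream, `align_up(1080, 16)` gives a coded height of 1088, so eight rows below the crop window would need to be filled this way.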

Internal Memory

The following pseudo code shows the encoding procedure with internal memory:

    /* No QueryIOSurf call or pool allocation: oneVPL allocates the surfaces. */
    MFXVideoENCODE_Init(session, &init_param);
    sts=MFX_ERR_MORE_DATA;
    for (;;) {
        if (sts==MFX_ERR_MORE_DATA && !end_of_stream()) {
            MFXMemory_GetSurfaceForEncode(session,&surface);
            fill_content_for_encoding(surface);
        }
        /* A NULL surface drains the frames cached inside the encoder. */
        surface2=end_of_stream()?NULL:surface;
        sts=MFXVideoENCODE_EncodeFrameAsync(session,NULL,surface2,bits,&syncp);
        /* Drop the application's reference once the frame has been submitted. */
        if (surface2) surface2->FrameInterface->Release(surface2);
        if (end_of_stream() && sts==MFX_ERR_MORE_DATA) break;
        // Skipped other error handling
        if (sts==MFX_ERR_NONE) {
            MFXVideoCORE_SyncOperation(session, syncp, INFINITE);
            do_something_with_encoded_bits(bits);
        }
    }
    MFXVideoENCODE_Close(session);

There are several key differences in this example, compared to external memory (legacy mode):

- The application does not need to call the MFXVideoENCODE_QueryIOSurf() function to obtain the number of working frame surfaces since allocation is done by oneVPL.
- The application calls the MFXMemory_GetSurfaceForEncode() function to get a free surface for the subsequent encode operation.
- The application must call the mfxFrameSurfaceInterface::Release function to decrement the reference counter of the obtained surface after the call to the MFXVideoENCODE_EncodeFrameAsync() function.
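The reference-counting behavior described in the last point can be modeled with a toy counter. Everything in the sketch below is hypothetical: `RefSurface` and the `sketch_*` helpers are not oneVPL API, and the sketch assumes that the encoder takes its own reference for as long as it still needs the frame, which is why the application can release its reference right after submission.

```c
/* Hypothetical model of a reference-counted surface; not oneVPL API. */
typedef struct {
    int refcount;
} RefSurface;

/* Models obtaining a surface: the caller receives one reference. */
static void sketch_get_surface(RefSurface *s)
{
    s->refcount = 1;
}

/* Models the encoder keeping the frame for asynchronous work (assumption). */
static void sketch_encoder_hold(RefSurface *s)
{
    s->refcount++;
}

/* Models Release(): drop one reference. Returns 1 once nobody holds the
 * surface any more, 0 while it is still in use. */
static int sketch_release(RefSurface *s)
{
    if (s->refcount > 0)
        s->refcount--;
    return s->refcount == 0;
}
```

In this model the application's release leaves the count at one while the encoder is still working, and the surface becomes free only when the encoder drops its reference as well.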

Configuration Change

The application changes configuration during encoding by calling the MFXVideoENCODE_Reset() function. Depending on the difference in configuration parameters before and after the change, the oneVPL encoder will either continue the current sequence or start a new one. If the encoder starts a new sequence, it completely resets its internal state and begins the new sequence with an IDR frame.

The application controls encoder behavior during parameter change by attaching the mfxExtEncoderResetOption structure to the mfxVideoParam structure during reset. By using this structure, the application instructs the encoder to start or not start a new sequence after reset. In some cases, the request to continue the current sequence cannot be satisfied and the encoder will fail during reset. To avoid this scenario, the application may query the reset outcome before the actual reset by calling the MFXVideoENCODE_Query() function with the mfxExtEncoderResetOption attached to the mfxVideoParam structure.

The application uses the following procedure to change encoding configurations:

1. The application retrieves any cached frames in the oneVPL encoder by calling the MFXVideoENCODE_EncodeFrameAsync() function with a NULL input frame pointer until the function returns mfxStatus::MFX_ERR_MORE_DATA.
2. The application calls the MFXVideoENCODE_Reset() function with the new configuration:
   - If the function successfully sets the configuration, the application can continue encoding as usual.
   - If the new configuration requires a new memory allocation, the function returns mfxStatus::MFX_ERR_INCOMPATIBLE_VIDEO_PARAM. The application must close the oneVPL encoder and reinitialize the encoding procedure with the new configuration.
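The decision the application makes after the reset call can be summarized as a small mapping from outcome to next step. The enums and the `action_after_reset` function below are hypothetical names for this sketch only; a real application would test the mfxStatus value returned by MFXVideoENCODE_Reset() instead.

```c
/* Hypothetical outcome and action codes used only in this sketch. */
typedef enum {
    SKETCH_RESET_OK,                 /* stands in for MFX_ERR_NONE */
    SKETCH_RESET_INCOMPATIBLE_PARAM, /* stands in for MFX_ERR_INCOMPATIBLE_VIDEO_PARAM */
    SKETCH_RESET_OTHER_ERROR         /* any other failure */
} SketchResetStatus;

typedef enum {
    SKETCH_CONTINUE_ENCODING,
    SKETCH_CLOSE_AND_REINIT,
    SKETCH_REPORT_ERROR
} SketchAction;

/* Map the outcome of a reset attempt to the application's next step. */
static SketchAction action_after_reset(SketchResetStatus status)
{
    switch (status) {
    case SKETCH_RESET_OK:
        return SKETCH_CONTINUE_ENCODING;
    case SKETCH_RESET_INCOMPATIBLE_PARAM:
        /* New memory allocation is needed: close, then initialize again. */
        return SKETCH_CLOSE_AND_REINIT;
    default:
        return SKETCH_REPORT_ERROR;
    }
}
```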

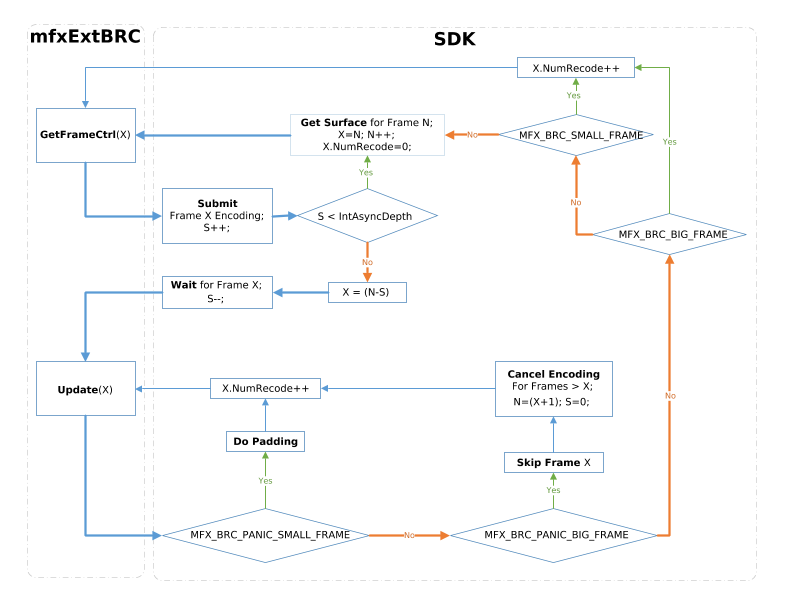

External Bitrate Control

The application can make the encoder use the external Bitrate Control (BRC) instead of the native bitrate control. To make the encoder use the external BRC, the application should attach the mfxExtCodingOption2 structure with ExtBRC = MFX_CODINGOPTION_ON and the mfxExtBRC callback structure to the mfxVideoParam structure during encoder initialization. The Init, Reset, and Close callbacks will be invoked inside their corresponding functions: MFXVideoENCODE_Init(), MFXVideoENCODE_Reset(), and MFXVideoENCODE_Close(). The following figure shows asynchronous encoding flow with external BRC (using GetFrameCtrl and Update):

Asynchronous encoding flow with external BRC

Note

IntAsyncDepth is the oneVPL max internal asynchronous encoding queue size. It is always less than or equal to mfxVideoParam::AsyncDepth.

The following pseudo code shows use of the external BRC:

    #include <stdlib.h>   /* malloc, free */
    #include <string.h>   /* memset */

    #include "mfxvideo.h"
    #include "mfxbrc.h"

    /* Silences unused-parameter warnings in the placeholder helpers below. */
    #define UNUSED_PARAM(x) ((void)(x))

    typedef struct {
        mfxU32 EncodedOrder;
        mfxI32 QP;
        mfxU32 MaxSize;
        mfxU32 MinSize;
        mfxU16 Status;
        mfxU64 StartTime;
        // ... skipped
    } MyBrcFrame;

    typedef struct {
        MyBrcFrame* frame_queue;
        mfxU32 frame_queue_size;
        mfxU32 frame_queue_max_size;
        mfxI32 max_qp[3]; //I,P,B
        mfxI32 min_qp[3]; //I,P,B
        // ... skipped
    } MyBrcContext;

    /* Find an attached extension buffer by its identifier. */
    void* GetExtBuffer(mfxExtBuffer** ExtParam, mfxU16 NumExtParam, mfxU32 bufferID)
    {
        int i=0;
        for(i = 0; i < NumExtParam; i++) {
            if(ExtParam[i]->BufferId == bufferID) return ExtParam[i];
        }
        return NULL;
    }

    static int IsParametersSupported(mfxVideoParam *par)
    {
        UNUSED_PARAM(par);
        // do some checks
        return 1;
    }

    static int IsResetPossible(MyBrcContext* ctx, mfxVideoParam *par)
    {
        UNUSED_PARAM(ctx);
        UNUSED_PARAM(par);
        // do some checks
        return 1;
    }

    static MyBrcFrame* GetFrame(MyBrcFrame *frame_queue, mfxU32 frame_queue_size, mfxU32 EncodedOrder)
    {
        UNUSED_PARAM(EncodedOrder);
        //do some logic
        if(frame_queue_size) return &frame_queue[0];
        return NULL;
    }

    static mfxU32 GetFrameCost(mfxU16 FrameType, mfxU16 PyramidLayer)
    {
        UNUSED_PARAM(FrameType);
        UNUSED_PARAM(PyramidLayer);
        // calculate cost
        return 1;
    }

    static mfxU32 GetMinSize(MyBrcContext *ctx, mfxU32 cost)
    {
        UNUSED_PARAM(ctx);
        UNUSED_PARAM(cost);
        // do some logic
        return 1;
    }

    static mfxU32 GetMaxSize(MyBrcContext *ctx, mfxU32 cost)
    {
        UNUSED_PARAM(ctx);
        UNUSED_PARAM(cost);
        // do some logic
        return 1;
    }

    static mfxI32 GetInitQP(MyBrcContext *ctx, mfxU32 MinSize, mfxU32 MaxSize, mfxU32 cost)
    {
        UNUSED_PARAM(ctx);
        UNUSED_PARAM(MinSize);
        UNUSED_PARAM(MaxSize);
        UNUSED_PARAM(cost);
        // do some logic
        return 1;
    }

    static mfxU64 GetTime(void)
    {
        mfxU64 wallClock = 0xFFFF;
        return wallClock;
    }

    static void UpdateBRCState(mfxU32 CodedFrameSize, MyBrcContext *ctx)
    {
        UNUSED_PARAM(CodedFrameSize);
        UNUSED_PARAM(ctx);
        return;
    }

    static void RemoveFromQueue(MyBrcFrame* frame_queue, mfxU32 frame_queue_size, MyBrcFrame* frame)
    {
        UNUSED_PARAM(frame_queue);
        UNUSED_PARAM(frame_queue_size);
        UNUSED_PARAM(frame);
        return;
    }

    static mfxU64 GetMaxFrameEncodingTime(MyBrcContext *ctx)
    {
        UNUSED_PARAM(ctx);
        return 2;
    }

    /* Init callback: invoked inside MFXVideoENCODE_Init(). */
    mfxStatus MyBrcInit(mfxHDL pthis, mfxVideoParam* par) {
        MyBrcContext* ctx = (MyBrcContext*)pthis;
        mfxI32 QpBdOffset;
        mfxExtCodingOption2* co2;
        mfxI32 defaultQP = 4;

        if (!pthis || !par)
            return MFX_ERR_NULL_PTR;

        if (!IsParametersSupported(par))
            return MFX_ERR_UNSUPPORTED;

        ctx->frame_queue_max_size = par->AsyncDepth;
        ctx->frame_queue = (MyBrcFrame*)malloc(sizeof(MyBrcFrame) * ctx->frame_queue_max_size);

        if (!ctx->frame_queue)
            return MFX_ERR_MEMORY_ALLOC;

        co2 = (mfxExtCodingOption2*)GetExtBuffer(par->ExtParam, par->NumExtParam, MFX_EXTBUFF_CODING_OPTION2);
        QpBdOffset = (par->mfx.FrameInfo.BitDepthLuma > 8) ? (6 * (par->mfx.FrameInfo.BitDepthLuma - 8)) : 0;

        ctx->max_qp[0] = (co2 && co2->MaxQPI) ? (co2->MaxQPI - QpBdOffset) : defaultQP;
        ctx->min_qp[0] = (co2 && co2->MinQPI) ? (co2->MinQPI - QpBdOffset) : defaultQP;

        ctx->max_qp[1] = (co2 && co2->MaxQPP) ? (co2->MaxQPP - QpBdOffset) : defaultQP;
        ctx->min_qp[1] = (co2 && co2->MinQPP) ? (co2->MinQPP - QpBdOffset) : defaultQP;

        ctx->max_qp[2] = (co2 && co2->MaxQPB) ? (co2->MaxQPB - QpBdOffset) : defaultQP;
        ctx->min_qp[2] = (co2 && co2->MinQPB) ? (co2->MinQPB - QpBdOffset) : defaultQP;

        // skipped initialization of other BRC parameters

        ctx->frame_queue_size = 0;

        return MFX_ERR_NONE;
    }

    /* Reset callback: invoked inside MFXVideoENCODE_Reset(). */
    mfxStatus MyBrcReset(mfxHDL pthis, mfxVideoParam* par) {
        MyBrcContext* ctx = (MyBrcContext*)pthis;

        if (!pthis || !par)
            return MFX_ERR_NULL_PTR;

        if (!IsParametersSupported(par))
            return MFX_ERR_UNSUPPORTED;

        if (!IsResetPossible(ctx, par))
            return MFX_ERR_INCOMPATIBLE_VIDEO_PARAM;

        // reset here BRC parameters if required

        return MFX_ERR_NONE;
    }

    /* Close callback: invoked inside MFXVideoENCODE_Close(). */
    mfxStatus MyBrcClose(mfxHDL pthis) {
        MyBrcContext* ctx = (MyBrcContext*)pthis;

        if (!pthis)
            return MFX_ERR_NULL_PTR;

        if (ctx->frame_queue) {
            free(ctx->frame_queue);
            ctx->frame_queue = NULL;
            ctx->frame_queue_max_size = 0;
            ctx->frame_queue_size = 0;
        }

        return MFX_ERR_NONE;
    }

    /* Called before a frame is encoded (or re-encoded) to choose its QP. */
    mfxStatus MyBrcGetFrameCtrl(mfxHDL pthis, mfxBRCFrameParam* par, mfxBRCFrameCtrl* ctrl) {
        MyBrcContext* ctx = (MyBrcContext*)pthis;
        MyBrcFrame* frame = NULL;
        mfxU32 cost;

        if (!pthis || !par || !ctrl)
            return MFX_ERR_NULL_PTR;

        if (par->NumRecode > 0)
            frame = GetFrame(ctx->frame_queue, ctx->frame_queue_size, par->EncodedOrder);
        else if (ctx->frame_queue_size < ctx->frame_queue_max_size)
            frame = &ctx->frame_queue[ctx->frame_queue_size++];

        if (!frame)
            return MFX_ERR_UNDEFINED_BEHAVIOR;

        if (par->NumRecode == 0) {
            frame->EncodedOrder = par->EncodedOrder;
            cost = GetFrameCost(par->FrameType, par->PyramidLayer);
            frame->MinSize = GetMinSize(ctx, cost);
            frame->MaxSize = GetMaxSize(ctx, cost);
            frame->QP = GetInitQP(ctx, frame->MinSize, frame->MaxSize, cost); // from QP/size stat
            frame->Status = MFX_BRC_OK; // queue memory comes from malloc, so set it explicitly
            frame->StartTime = GetTime();
        }

        ctrl->QpY = frame->QP;

        return MFX_ERR_NONE;
    }

    #define DEFAULT_QP_INC 4
    #define DEFAULT_QP_DEC 4

    /* Called after a frame is encoded to accept it or request a re-encode. */
    mfxStatus MyBrcUpdate(mfxHDL pthis, mfxBRCFrameParam* par, mfxBRCFrameCtrl* ctrl, mfxBRCFrameStatus* status) {
        MyBrcContext* ctx = (MyBrcContext*)pthis;
        MyBrcFrame* frame = NULL;
        mfxU32 panic = 0;

        if (!pthis || !par || !ctrl || !status)
            return MFX_ERR_NULL_PTR;

        frame = GetFrame(ctx->frame_queue, ctx->frame_queue_size, par->EncodedOrder);
        if (!frame)
            return MFX_ERR_UNDEFINED_BEHAVIOR;

        // update QP/size stat here

        if (   frame->Status == MFX_BRC_PANIC_BIG_FRAME
            || frame->Status == MFX_BRC_PANIC_SMALL_FRAME)
            panic = 1;

        // Accept the frame if it fits the size window or a panic was already raised.
        if (panic || (par->CodedFrameSize >= frame->MinSize && par->CodedFrameSize <= frame->MaxSize)) {
            UpdateBRCState(par->CodedFrameSize, ctx);
            RemoveFromQueue(ctx->frame_queue, ctx->frame_queue_size, frame);
            ctx->frame_queue_size--;
            status->BRCStatus = MFX_BRC_OK;

            // Here update Min/MaxSize for all queued frames

            return MFX_ERR_NONE;
        }

        panic = ((GetTime() - frame->StartTime) >= GetMaxFrameEncodingTime(ctx));

        if (par->CodedFrameSize > frame->MaxSize) {
            if (panic || (frame->QP >= ctx->max_qp[0])) {
                frame->Status = MFX_BRC_PANIC_BIG_FRAME;
            } else {
                frame->Status = MFX_BRC_BIG_FRAME;
                frame->QP += DEFAULT_QP_INC; // raise QP to shrink the re-encoded frame
            }
        }

        if (par->CodedFrameSize < frame->MinSize) {
            if (panic || (frame->QP <= ctx->min_qp[0])) {
                frame->Status = MFX_BRC_PANIC_SMALL_FRAME;
                status->MinFrameSize = frame->MinSize;
            } else {
                frame->Status = MFX_BRC_SMALL_FRAME;
                frame->QP -= DEFAULT_QP_DEC; // lower QP to grow the re-encoded frame
            }
        }

        status->BRCStatus = frame->Status;

        return MFX_ERR_NONE;
    }

    void EncoderInit()
    {
        //initialize encoder
        // `session` is assumed to be an already initialized mfxSession.
        // In a real application brc_ctx and the extension buffers must stay
        // valid for as long as the encoder can invoke the callbacks.
        MyBrcContext brc_ctx;
        mfxExtBRC ext_brc;
        mfxExtCodingOption2 co2;
        mfxExtBuffer* ext_buf[2] = {&co2.Header, &ext_brc.Header};
        mfxVideoParam vpar;
        mfxStatus sts;

        memset(&brc_ctx, 0, sizeof(MyBrcContext));
        memset(&ext_brc, 0, sizeof(mfxExtBRC));
        memset(&co2, 0, sizeof(mfxExtCodingOption2));
        memset(&vpar, 0, sizeof(mfxVideoParam));

        vpar.ExtParam = ext_buf;
        vpar.NumExtParam = sizeof(ext_buf) / sizeof(ext_buf[0]);

        co2.Header.BufferId = MFX_EXTBUFF_CODING_OPTION2;
        co2.Header.BufferSz = sizeof(mfxExtCodingOption2);
        co2.ExtBRC = MFX_CODINGOPTION_ON;

        ext_brc.Header.BufferId = MFX_EXTBUFF_BRC;
        ext_brc.Header.BufferSz = sizeof(mfxExtBRC);
        ext_brc.pthis = &brc_ctx;
        ext_brc.Init = MyBrcInit;
        ext_brc.Reset = MyBrcReset;
        ext_brc.Close = MyBrcClose;
        ext_brc.GetFrameCtrl = MyBrcGetFrameCtrl;
        ext_brc.Update = MyBrcUpdate;

        sts = MFXVideoENCODE_Query(session, &vpar, &vpar);
        if (sts == MFX_ERR_UNSUPPORTED || co2.ExtBRC != MFX_CODINGOPTION_ON) {
            // unsupported case: external BRC is not available, skip initialization
        } else {
            sts = MFXVideoENCODE_Init(session, &vpar);
        }
    }

JPEG

The application can use the same encoding procedures for JPEG/motion JPEG encoding, as shown in the following pseudo code:

    // encoder initialization
    MFXVideoENCODE_Init (...);

    // single frame/picture encoding
    MFXVideoENCODE_EncodeFrameAsync (...);
    MFXVideoCORE_SyncOperation(...);

    // close down
    MFXVideoENCODE_Close(...);

The application may specify Huffman and quantization tables during encoder initialization by attaching mfxExtJPEGQuantTables and mfxExtJPEGHuffmanTables buffers to the mfxVideoParam structure. If the application does not define tables, then the oneVPL encoder uses tables recommended in ITU-T Recommendation T.81. If the application does not define a quantization table, it must specify the mfxInfoMFX::Quality parameter. In this case, the oneVPL encoder scales the default quantization table according to the specified mfxInfoMFX::Quality parameter value.

The application should properly configure chroma sampling format and color format using the mfxFrameInfo::FourCC and mfxFrameInfo::ChromaFormat fields. For example, to encode a 4:2:2 vertically sampled YCbCr picture, the application should set mfxFrameInfo::FourCC to MFX_FOURCC_YUY2 and mfxFrameInfo::ChromaFormat to MFX_CHROMAFORMAT_YUV422V. To encode a 4:4:4 sampled RGB picture, the application should set mfxFrameInfo::FourCC to MFX_FOURCC_RGB4 and mfxFrameInfo::ChromaFormat to MFX_CHROMAFORMAT_YUV444.

The oneVPL encoder supports different sets of chroma sampling and color formats on different platforms. The application must call the MFXVideoENCODE_Query() function to check if the required color format is supported on a given platform and then initialize the encoder with proper values of mfxFrameInfo::FourCC and mfxFrameInfo::ChromaFormat.

The application should not define the number of scans and number of components. These numbers are derived by the oneVPL encoder from the mfxInfoMFX::Interleaved flag and from the chroma type. If interleaved coding is specified, then one scan is encoded that contains all image components. Otherwise, the number of scans is equal to the number of components. The encoder uses the following component IDs: “1” for luma (Y), “2” for chroma Cb (U), and “3” for chroma Cr (V).
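The rule for deriving the number of scans can be written down in a few lines. The helper names below are hypothetical, and the sketch assumes that a monochrome picture has a single component while every other chroma type has the three components Y, Cb, and Cr.

```c
/* Number of image components: 1 for monochrome (assumption), otherwise
 * the three components Y, Cb and Cr. */
static int jpeg_num_components(int is_monochrome)
{
    return is_monochrome ? 1 : 3;
}

/* One scan holding all components when interleaved; otherwise one scan
 * per component. */
static int jpeg_num_scans(int interleaved, int num_components)
{
    return interleaved ? 1 : num_components;
}
```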

The application should allocate a buffer that is big enough to hold the encoded picture. A rough upper limit may be calculated using the following equation, where Width and Height are the width and height of the picture in pixels and BytesPerPx is the number of bytes for one pixel:

BufferSizeInKB = 4 + (Width * Height * BytesPerPx + 1023) / 1024;

BytesPerPx equals 1 for a monochrome picture, 1.5 for NV12 and YV12 color formats, 2 for YUY2 color format, and 3 for RGB32 color format (alpha channel is not encoded).
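As a worked example, the equation can be evaluated with integer arithmetic only. The function and enum names below are hypothetical; bytes per pixel are stored in halves so that the 1.5 used for NV12 and YV12 stays an integer.

```c
#include <stdint.h>

/* Bytes per pixel expressed in halves: 2 means 1 byte, 3 means 1.5 bytes. */
enum {
    BPP_X2_MONO  = 2,  /* monochrome    */
    BPP_X2_NV12  = 3,  /* NV12 and YV12 */
    BPP_X2_YUY2  = 4,  /* YUY2          */
    BPP_X2_RGB32 = 6   /* RGB32, alpha channel not encoded */
};

/* BufferSizeInKB = 4 + (Width * Height * BytesPerPx + 1023) / 1024 */
static uint64_t jpeg_buffer_size_kb(uint32_t width, uint32_t height,
                                    uint32_t bpp_x2)
{
    uint64_t bytes = (uint64_t)width * height * bpp_x2 / 2;
    return 4 + (bytes + 1023) / 1024;
}
```

For a 1920x1080 NV12 picture this gives 1920 * 1080 * 1.5 = 3110400 bytes, which rounds up to 3038 KB, plus 4 KB for a total of 3042 KB.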

Multi-view Video Encoding

Similar to the decoding and video processing initialization procedures, the application attaches the mfxExtMVCSeqDesc structure to the mfxVideoParam structure for encoding initialization. The mfxExtMVCSeqDesc structure configures the oneVPL MVC encoder to work in three modes:

- Default dependency mode: The application specifies mfxExtMVCSeqDesc::NumView and sets all other fields to zero. The oneVPL encoder creates a single operation point with all views (view identifier 0 : NumView-1) as target views. The first view (view identifier 0) is the base view. Other views depend on the base view.
- Explicit dependency mode: The application specifies mfxExtMVCSeqDesc::NumView and the view dependency array, and sets all other fields to zero. The oneVPL encoder creates a single operation point with all views (view identifier View[0 : NumView-1].ViewId) as target views. The first view (view identifier View[0].ViewId) is the base view. View dependencies are defined as mfxMVCViewDependency structures.
- Complete mode: The application fully specifies the views and their dependencies. The oneVPL encoder generates a bitstream with corresponding stream structures.

During encoding, the oneVPL encoding function MFXVideoENCODE_EncodeFrameAsync() accumulates input frames until encoding of a picture is possible. The function returns mfxStatus::MFX_ERR_MORE_DATA to request more data at input or mfxStatus::MFX_ERR_NONE if it successfully accumulated enough data for encoding a picture. The generated bitstream contains the complete picture (multiple views). The application can change this behavior and instruct the encoder to output each view in a separate bitstream buffer. To do so, the application must turn on the mfxExtCodingOption::ViewOutput flag. In this case, the encoder returns mfxStatus::MFX_ERR_MORE_BITSTREAM if it needs more bitstream buffers at output and mfxStatus::MFX_ERR_NONE when processing of the picture (multiple views) has been finished. It is recommended that the application provide a new input frame each time the oneVPL encoder requests a new bitstream buffer. The application must submit view data for encoding in the order in which the views are described in the mfxExtMVCSeqDesc structure. Data for a particular view can be submitted for encoding only when all views that it depends upon have already been submitted.
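The ordering constraint on view submission can be checked with a short routine. The sketch below uses a hypothetical `SketchView` type rather than the real mfxMVCViewDependency structure, and simply verifies that every view appears after all views it depends on.

```c
#include <stddef.h>

#define SKETCH_MAX_DEPS 4

/* Hypothetical view description: an identifier plus the identifiers of
 * the views it depends on. */
typedef struct {
    int    view_id;
    size_t num_deps;
    int    deps[SKETCH_MAX_DEPS];
} SketchView;

/* Return 1 if each view in submission order `views` comes after all of the
 * views it depends on, otherwise 0. */
static int submission_order_is_valid(const SketchView *views, size_t count)
{
    for (size_t i = 0; i < count; i++) {
        for (size_t d = 0; d < views[i].num_deps; d++) {
            int found = 0;
            /* A dependency must be among the views submitted earlier. */
            for (size_t j = 0; j < i; j++) {
                if (views[j].view_id == views[i].deps[d]) {
                    found = 1;
                    break;
                }
            }
            if (!found)
                return 0;
        }
    }
    return 1;
}
```

A base view with no dependencies followed by views that reference only earlier views passes the check; submitting a dependent view before its base view does not.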

The following pseudo code shows the encoding procedure:

    mfxExtBuffer *eb;
    mfxExtMVCSeqDesc seq_desc;
    mfxVideoParam init_param;

    init_param.ExtParam=(mfxExtBuffer **)&eb;
    init_param.NumExtParam=1;
    eb=(mfxExtBuffer *)&seq_desc;

    /* init encoder */
    MFXVideoENCODE_Init(session, &init_param);

    /* perform encoding */
    for (;;) {
        MFXVideoENCODE_EncodeFrameAsync(session, NULL, surface2, bits,
                                        &syncp);
        MFXVideoCORE_SyncOperation(session,syncp,INFINITE);
    }

    /* close encoder */
    MFXVideoENCODE_Close(session);